In this section, the introduction of a novel algorithm, named Nonlinear Activated Beetle Antennae Search Egret Swarm Optimization Algorithm (NBESOA), is presented. This algorithm integrates the strategies of NABAS and ESOA. The fundamental principles of ESOA and NABAS are initially outlined. Following this, the limitations inherent in ESOA are analyzed, and enhancements are proposed through the incorporation of NABAS strategies.

Basic egret swarm optimization algorithm

The ESOA process can be segmented into two distinct parts. The first part, encompassing the Sit-and-Wait and Aggressive strategies, demonstrates the cooperative prey-searching behaviour of an egret team within a solution space. Subsequently, the second part involves updating the search outcomes of each team, guided by specific discriminant conditions.

Searching strategy

In a team, each egret fulfills specific duties. Under the Sit-and-Wait strategy, Egret A adheres to a defined standard of behavior, which can be represented by an estimated tangent plane \(\mathcal {A}(*)\), while the actual value of the objective function is culculated by \(\mathbb {F}(*)\). Assume the position of the i-th egret in the team is \({\varvec{x}}_i \in \mathbb {R}^n\), where n is the dimension of this unresolved problem and this position carries a weight \({\varvec{\omega }}_i\) in the evaluation method. At the current position, the prey’s estimated value is parameterized as

$$\begin{aligned} {{\tilde{y}}}_i = \mathcal {A}({\varvec{x}}_i) = {\varvec{\omega }_i}{\varvec{x}}_i. \end{aligned}$$

However, Egret A’s judgment criteria have a deviation \(\mathcal {E} _i = {\Vert {{{\tilde{y}}}_i – {y_i}} \Vert }^2 / 2\) from the actual value of the prey, where \(y_i = \mathbb {F}({\varvec{x}}_i)\) is the value calculated by the objective function. To adjust this deviation, Egret A reorients in the direction \({\varvec{\tilde{d}}}_{i}\) guided by the practical gradient \(\varvec{\tilde{g}}_i = {\partial {\mathcal {E} _i}}/{\partial {{\varvec{\omega }} _i}}\), which is defined as

$$\begin{aligned} {\varvec{\tilde{d}}}_{i} = {\varvec{\tilde{g}}_i} / {|| {\varvec{\tilde{g}}_i} ||}. \end{aligned}$$

(12)

Besides judging based on the current situation and adjusting the direction, Egret A also relies on the historical hunting experience of its team and the entire group. Assuming the team and the group currently have information about the optimal locations of prey \({\varvec{x}}_\text {hbest}\)and \({\varvec{x}}_\text {gbest}\), the best fitness values \(y_\text {hbest}\) and\(y_\text {gbest}\), as well as the best correction directions \({\varvec{d}}_\text {hbest}\) and \({\varvec{d}}_\text {gbest}\). Based on the information from the team and the group, the direction corrections for Egret A at its current location can be calculated as follows

$$\begin{aligned} {\varvec{{\tilde{d}}}_{h,i}}= & \frac{({\varvec{x}}_\text {hbest} – {\varvec{x}}_i) \cdot (y_\text {hbest} – y_i)}{|| {{\varvec{x}}_\text {hbest}} – {\varvec{x}}_i ||^2} + {\varvec{d}}_\text {hbest}, \end{aligned}$$

(13)

$$\begin{aligned} {\varvec{{\tilde{d}}}_{g,i}}= & \frac{({\varvec{x}}_\text {gbest} – {\varvec{x}}_i) \cdot (y_\text {gbest} – y_i)}{|| {{\varvec{x}}_\text {gbest}} – {\varvec{x}}_i ||^2} + {\varvec{d}}_\text {gbest}, \end{aligned}$$

(14)

where, \({\varvec{{\tilde{d}}}_{h,i}}\) is the revised direction influenced by the team of Egret, while \({\varvec{{\tilde{d}}}_{g,i}}\) corresponds to the group. The combination of the (12) to (14) is represented as an integrated gradient, which is denoted as

$$\begin{aligned} {{\varvec{g}}_i} = ( 1 – {k_h} – {k_g} ) \cdot {\varvec{{\tilde{d}}}_i} + {k_h} \cdot {\varvec{{\tilde{d}}}_{h,i}} + {k_g} \cdot {\varvec{{\tilde{d}}}_{g,i}}, \end{aligned}$$

(15)

where \(k_h \in [0,0.5)\) and \(k_g \in [0,0.5)\). Thus, Egret A can move to the next position \({\varvec{x}}_{A,i}\) according to its judgement,

$$\begin{aligned} {\varvec{x}}_{A,i} = {\varvec{x}}_i + step_A \cdot \exp (-l / (-1 \cdot l_{\max })) \cdot h \cdot {{\varvec{g}}_i}, \end{aligned}$$

(16)

where \(step_A \in (0,1]\) is the scalar quantity that controls the distance the Egret A moves, and h represents the gap between the up bound and with low bound. Additionally, l is the iteration count while \(l_{\max }\) represents l gets maximum.

In the Aggressive strategy, Egret B freely conducts random searches throughout the entire solution space. This behavior is influenced by the random number \(k_{B,i} \in (-\pi /2,\pi /2)\), which can be represented as

$$\begin{aligned} {\varvec{x}}_{B,i} = {\varvec{x}}_i + step_B \cdot {\tan ( k_{B,i} ) \cdot h} /(l+1). \end{aligned}$$

(17)

There is a factor \(step_B \in (0,1]\) that controls the movement of Egret B. Unlike other members of the egret team, Egret B’s search for prey depends solely on itself.

As another aspect of the Aggressive strategy, Egret C appears to lack independent judgment. It prefers to pursue prey based on collaborative experience and adopts the encircling mechanism, which is described by

$$\begin{aligned} \begin{aligned} {\varvec{x}}_{C,i} = \,&( {1 – {k_m} – k_n} ) \cdot {\varvec{ x}_i} + {k_m} \cdot ({\varvec{x}}_\text {hbest} – {\varvec{x}}_i) + {k_n} \cdot ({\varvec{x}}_\text {gbest} – {\varvec{x}}_i), \end{aligned} \end{aligned}$$

(18)

where \(k_n\) and \(k_m\) are both random numbers in [0, 0.5).

Updating strategy

Egret A, Egret B, and Egret C provide their own opinions in (16)–(18) on team position updates, which can be combined into a solution matrix denoted to

$$\begin{aligned} {\varvec{x}}_{I,i} = [ {{\varvec{x}}_{A,i},{\varvec{x}}_{B,i},{\varvec{x}}_{C,i}} ]. \end{aligned}$$

(19)

Subsequently, according to (19), an optimal solution can be obtained:

$$\begin{aligned} {\varvec{y}}_{I,i} = [ {{y_{A,i}},{y_{B,i}},{y_{C,i}}} ]. \end{aligned}$$

(20)

Define \(y_{min,i}\) as the minimum value of the total egret group in \({\varvec{y}}_{I,i}\) in the i-th iteration, the updated position can be determined by

$$\begin{aligned} {\varvec{x}}_{i+1} = \left\{ \begin{array}{l} {\varvec{x}}_{I,i}~~~~\text {if}~~y_{min,i}

(21)

with the random number \(r \in (0,1)\), even if the Egret team does not find a better position in the ith iteration, it still has a \(30\%\) chance of jumping out of the current position. The flowchart of ESOA is shown in Fig. 2.

Nonlinear activated beetle antennae search algorithm

Unlike the egret group, which works in concert between individuals and the group during hunting, the BAS algorithm has only one particle in the search space, which relies entirely on individual searching ability. Each beetle is equipped with two antennae that hunt by collecting odour as a tool. Based on the comparative results collected from the antennae, the beetles decide their next location to move to. If the right antenna receives a higher concentration of odour than the left, it indicates that the food is on the right side, and vice versa. The search behavior of the left and right antennae for food is defined as follows,

$$\begin{aligned} {\varvec{x}_{R,i}} = {\varvec{x}_i} + \zeta _i \cdot {\varvec{b}} \textit{,} \quad {\varvec{x}_{L,i}} = {\varvec{x}_i} – \zeta _i \cdot {\varvec{b}}, \end{aligned}$$

where the right-side position \(\varvec{x}_{R,i}\) and the left-side position \(\varvec{x}_{L,i}\) are controlled by the length of antennae \(\zeta _i\) and a random direction vector \(\varvec{b}\). The concentration difference of food odour between the left and right position, represented as \(\Delta \mathbb {F}\), influences the outcome of the signum function \(\mathrm sgn(*)\). Furthermore, the beetle’s next step in the search space is given by

$$\begin{aligned} {\varvec{x}_{i+1}} = {\varvec{x}_i} \pm \psi _i \cdot {\varvec{b}} \cdot {\mathop {\textrm{sgn}}} ( \Delta \mathbb {F}) = {\varvec{x}_i} \pm \psi _i \cdot {\varvec{b}} \cdot {\mathop {\textrm{sgn}}}( \mathbb {F}({\varvec{x}_{R,i}}) – \mathbb {F}({\varvec{x}_{L,i}})), \end{aligned}$$

(22)

where \(\lambda _i\) is the step factor controlling the convergence speed of BAS. The symbol ‘±’ appearing in (22) changes according to the type of optimization problem that BAS is solving. If BAS is applied to solve a minimization problem, ‘−’ should be used in (22), while ‘\(+\)’ is used for maximization problems.

After each iteration, both \(\zeta\) and \(\psi\) are updated to adapt to the gradually decreasing search area as the number of iterations increases. The specific update rules are as follows:

$$\begin{aligned} \begin{aligned}&\zeta _{i + 1} =0.95\zeta _i + 0.01,\\&\psi _{i + 1} = 0.95\psi _i. \end{aligned} \end{aligned}$$

(23)

However, this update rule may result in the BAS not effectively exploring the search space on certain gradient values, leading to premature convergence. To overcome this issue, a nonlinear activated factor \(\mu\) is introduced into the BAS algorithm to control the update speed of the step size factors44. The new update rule is

$$\begin{aligned} \begin{aligned}&\mu _{i+1} = \eta _i\psi _i\mu _i + (1-\eta _i)\mu _i,\\&\zeta _{i + 1} =\eta _i(0.95\zeta _i + 0.01)+(1-\eta _i)\zeta _i,\\&\psi _{i + 1} = 0.95 \psi _i \eta _i +(1-\eta _i)\psi _i, \end{aligned} \end{aligned}$$

(24)

where \(\eta _i\) represents a decision factor that is influenced by the comparison of \(\Delta \mathbb {F}\) and \(\mu _i\). The definition of \(\eta _i\) is

$$\begin{aligned} \eta _i = \left\{ \begin{array}{l} 1, \quad \text {if} ~~|\Delta \mathbb {F}| \le \mu _i\\ 0, \quad \text {else}. \end{array} \right. \end{aligned}$$

(25)

Under this rule, the particle that is far from the target continues to conduct wide-range searches, and only when it approaches the target within a certain range will lead \(\zeta\) and \(\psi\) to be updated.

NABAS strategy improving egret swarm optimization algorithm

From the analysis of (12)–(18), it is revealed that, within ESOA, the philosophies of Egret A employing the Sit-and-Wait strategy and Egret B and C using the Aggressive strategy significantly diverge.

In (15), the determinants of Egret A’s positional update encompass three elements: the pseudo gradient of weight in the observation equation \(\mathcal {A}(*)\), the team’s historical experience, and the group’s collective experience. The two factors based on historical experience make (15) the only part in ESOA influenced by the fitness function. However, in (15), the two stochastic numbers \(k_h\) and \(k_g\) can significantly impact the trajectory of Egret A, potentially leading to two extreme scenarios:

(1) When \(k_h\) and \(k_g\) both approach 0.5, Egret A may lose its own judgment, becoming entirely influenced by other members of the population, leading to premature convergence.

(2) In contrast, when both \(k_h\) and \(k_g\) asymptotically approach 0, Egret A’s behavior becomes exclusively reliant on its observational modality, precluding the team from optimizing actions based on fitness function outcomes, thereby resulting in a loss of directional focus.

Furthermore, while Egret B possesses a global perspective, potentially accruing enhanced benefits for the group, it concurrently incurs an increased energy expenditure. Conversely, the strategy of Egret C, predominantly influenced by historical experience, involves proximal circumnavigation and exploration, a method that is prone to entrap the group in local optima.

To address the aforementioned shortcomings of ESOA, a particle \({\varvec{x}}_{S,i}\) embedded with the NABAS strategy is introduced into the ESOA group. This particle conducts a fitness function search in the vicinity of its current position during each iteration, effectively resolving the issue where ESOA deviates from practical problems in extreme cases and reducing dependency on random numbers. Additionally, the introduction of an activation factor adaptively controls the update speed of the step size, and in comparison with Egret B, it reduces energy expenditure. Therefore, the updating strategies (19) and (20) of NBESOA are modified like below:

$$\begin{aligned} {\varvec{x}}_{I,i}= & [ {{\varvec{x}}_{A,i},{\varvec{x}}_{B,i},{\varvec{x}}_{C,i}},{\varvec{x}}_{S,i} ], \end{aligned}$$

(26)

$$\begin{aligned} {\varvec{y}}_{I,i}= & [ {y_{A,i}},{y_{B,i}},{y_{C,i}}, {y_{S,i}} ]. \end{aligned}$$

(27)

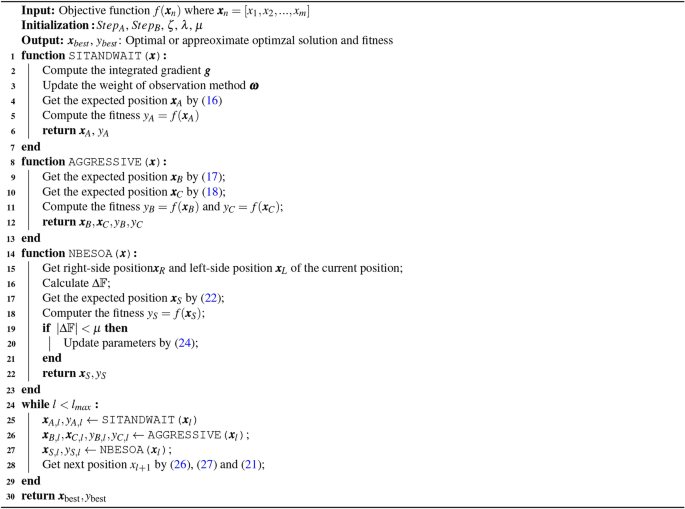

According to the best value in (27), NBESOA still updates the optimal position by (21). The pseudo code of NBESOA is shown in Algorithm 1.

Complexity analysis

For clarity of expression, we denote the maximum number of iterations in Algorithm 1 as K, and the population size of the NBESOA as M. Assuming that each statement in the NBESOA consumes one unit of time and that, apart from a single while loop for iteration, there are no loops within the functions “SITANDWAIT”, “AGGRESSIVE”, and “NABAS,” thus, these parts have a constant time complexity, denoted as O(1) . Consequently, the time complexity of the NBESOA is determined by the maximum number of iterations of the while loop, amounting to O(K) .

Regarding space complexity, it is necessary to account for all the data structures utilized within the algorithm. When the dimension of the optimization problem is N, the variables within NBESOA require storage for a matrix of dimensions \(K \times N\) at maximum. Hence, the space complexity of the NBESOA is O(KN) .

Convergence performance of the improved ESOA

Four common test functions are selected to assess the convergence efficacy of NBESOA. These functions are Drop-wave, Schaffer N.2, Levy N.13, and Rastrigin, detailed in Table 3.

Notably, each function is multimodal, non-convex, continuous, and possesses a singular global minimum within its solution space. To standardize the experimental results, we adjusted the original Drop-wave function by incrementing its cardinality by one, given its global optimum of 0. With their numerous local minima, these functions serve as an apt testbed for evaluating NBESOA’s proficiency in escaping local optima. We conducted 20 trials for each function using NBESOA, and the outcomes are presented in Fig. 3. Figure 3a illustrates the global convergence of NBESOA, demonstrating its rapid convergence to the optimal value within 200 iterations. Additionally, Fig. 3b displays a box plot of the 20 convergence outcomes, highlighting result variability. It is evident that NBESOA consistently converged to the global optimum in three of the functions across all trials, while the variance for the Levy N.13 function was within \(10^{-9}\), indicating minimal fluctuation in the algorithm’s performance.